🔗 LLM Supply Chain: Who’s Messing with My AI Ingredients? (OWASP LLM Top 10)

Welcome back, cyber enthusiasts! 🔥 In today’s AI LLM Red Teaming series, we’re diving into another juicy vulnerability from the OWASP Top 10 for LLMs: the Supply Chain.

If you think supply chains are just for shipping products, think again! For LLMs, the supply chain covers everything training data, pre-trained models, fine-tuning adapters, and even deployment platforms. And, oh boy, the risks are everywhere. 🫠

What is the LLM Supply Chain?

Imagine you’re hosting a potluck dinner. Your friends bring ingredients: veggies, sauces, and desserts. But what happens if Bob’s brownies have peanuts (oops, allergies), Jane’s tomato sauce has gone bad, and someone sneaks in a “special ingredient” you didn’t ask for? 🤔

That’s the LLM Supply Chain in a nutshell. It’s the series of external components like datasets, pre-trained models, and adapters that you rely on to create your AI masterpiece. But each step introduces risks: tampering, poisoning, outdated code, or even straight-up malware.

Risks: How Your Potluck (AI) Goes Wrong

Here are some real risks you face in your LLM supply chain:

🍞 Outdated Libraries (Stale Bread)

Using old or unmaintained libraries is like using expired milk-it works until it doesn’t. Attackers love exploiting outdated dependencies to sneak in malware or backdoors.

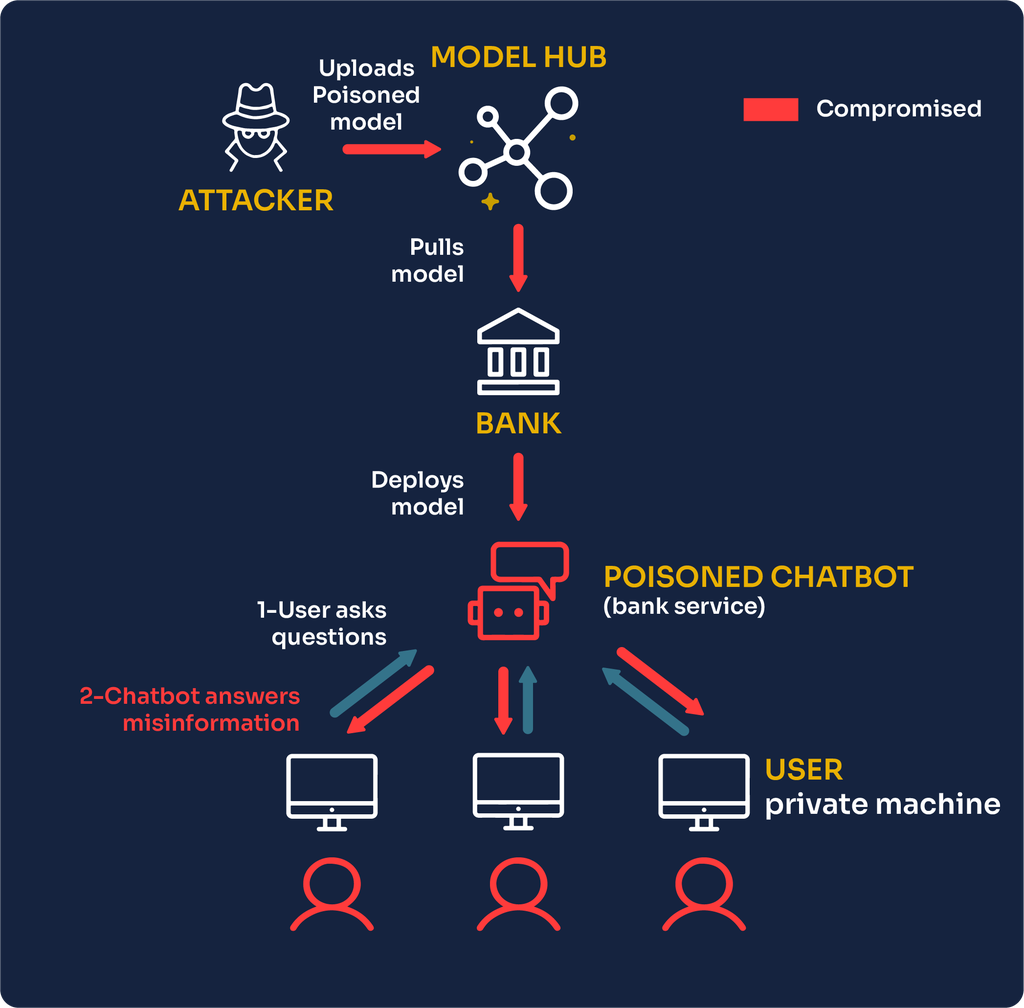

🥷 Malicious Pre-Trained Models (The Trojan Dish)

Pre-trained models save time but come with hidden surprises. They might include backdoors, biases, or even malicious code, making them the AI version of a Trojan horse.

🪤 LoRA Adapters (Too Good to Be True)

LoRA adapters are efficient fine-tuning tools, but they can be tampered with. Think of them as a “friend” offering to help, but secretly sabotaging your work.

☁️ Cloud Vulnerabilities (The Party Crasher)

Hosting LLMs on shared cloud platforms is great until attackers exploit virtualization layers or firmware vulnerabilities to access your sensitive data.

🧾 Licensing Drama (Legal Trouble)

Using datasets or tools without fully understanding their licenses? That’s a lawsuit waiting to happen. AI licenses are tricky, and ignoring them could cost you big.

Real-Life Cases: The LLM Drama is Real

PoisonGPT

A tampered model on Hugging Face exploited safety features and spread malicious outputs. It’s like swapping your spaghetti with worms and calling it “fine dining.”

PyTorch Dependency Hack

A Python library was compromised, infecting entire AI pipelines. This is why “free” doesn’t always mean safe.

Fake WizardLM Model

Attackers uploaded a malware-infested clone of a popular model. Think of it as buying a knockoff Rolex that secretly tracks your bank details.

Mitigations: How to Keep the AI Kitchen Safe

1️⃣ Vet Your Ingredients

Always verify the source of your libraries, datasets, and pre-trained models. Trust, but verify.

2️⃣ SBOMs (Software Bill of Materials)

Track every component in your LLM’s “recipe” to ensure nothing shady sneaks in.

3️⃣ Encrypt and Verify

Use encryption and integrity checks to ensure your models and adapters haven’t been tampered with.

4️⃣ Red Teaming

Simulate attacks on your supply chain to find weaknesses before attackers do.

5️⃣ Update and Patch Regularly

Don’t let outdated components become your Achilles’ heel. Keep everything fresh.

6️⃣ Use Provenance Tools

Verify model integrity with signing and file hashes. Think of it as the AI version of a security seal.

Final Thoughts

The LLM supply chain isn’t just a backend process it’s a critical vulnerability if left unchecked. With attackers targeting every step from training data to deployment, securing your AI systems is more important than ever.

So, whether you’re building the next GPT or fine-tuning a chatbot for your app, don’t let bad ingredients ruin your masterpiece. Secure your supply chain, test thoroughly, and remember: the best AI systems are built on trust and vigilance.